Installing Hadoop on macOS

1. Make sure the JDK and passwordless SSH login are set up
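For reference, the passwordless-login part of this step can be sketched as a small shell function. This is a sketch under stated assumptions, not the exact commands from the transcript that follows: the helper name `setup_passwordless_ssh` is hypothetical, the key is assumed to live at the default `id_rsa` path, and Remote Login has to be enabled in the macOS Sharing settings before `ssh localhost` can succeed.

```shell
# Hypothetical helper: create an RSA key without a passphrase (if none exists)
# and authorize it for logins to this machine.
setup_passwordless_ssh() {
  ssh_dir="${1:-$HOME/.ssh}"          # defaults to ~/.ssh
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir"

  # Generate the key pair only when it is missing; -N '' means "empty passphrase"
  [ -f "$ssh_dir/id_rsa" ] || ssh-keygen -q -t rsa -N '' -f "$ssh_dir/id_rsa"

  # Append the public key once; skip it if the same line is already present
  grep -qxF "$(cat "$ssh_dir/id_rsa.pub")" "$ssh_dir/authorized_keys" 2>/dev/null \
    || cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
  chmod 600 "$ssh_dir/authorized_keys"
}

# Usage: setup_passwordless_ssh && ssh localhost
```

Pressing Enter at the "Enter file in which to save the key" prompt has the same effect as the `-f` default used here.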

java -version

Last login: Fri Apr 2 08:22:35 on console

sunyun@sunyundeMacBook-Pro ~ % java -version

java version "1.8.0_241"

Java(TM) SE Runtime Environment (build 1.8.0_241-b07)

Java HotSpot(TM) 64-Bit Server VM (build 25.241-b07, mixed mode)

sunyun@sunyundeMacBook-Pro ~ % ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/Users/sunyun/.ssh/id_rsa): yes

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in yes.

Your public key has been saved in yes.pub.

The key fingerprint is:

SHA256:IhC+EKalYPyGRU/b8QBMYdtM2zqZYuOiLVDfbFHiNMg sunyun@sunyundeMacBook-Pro.local

The key's randomart image is:

+---[RSA 3072]----+

|+o+ooBo+ |

|=* oE.@ B |

|+ * * O o |

| o.= o + |

| .o..*.*S |

|. +.*.. |

|. . o |

| .o . |

| ... |

+----[SHA256]-----+

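sunyun@sunyundeMacBook-Pro ~ % cat ~/.ssh/local.pub >> ~/.ssh/authorized_keys

Note: in the transcript above, "yes" was typed at the file prompt, so that run saved the key pair as yes and yes.pub in the current directory. Press Enter at that prompt to keep the default ~/.ssh/id_rsa, and append whichever .pub file was actually generated.

2. Install Hadoop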

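- brew install hadoop (recommended): when the installation finishes, the output shows where it was installed.

- Download the archive from the official site, extract it to a directory of your choice, and install it manually (not recommended).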

sunyun@sunyundeMacBook-Pro ~ % brew install hadoop

Updating Homebrew...

==> Downloading https://mirrors.ustc.edu.cn/homebrew-bottles/bottles/openjdk-15.

==> Downloading from https://d29vzk4ow07wi7.cloudfront.net/e91cd8028e8bb7415bcb9

######################################################################## 100.0%

==> Downloading https://www.apache.org/dyn/closer.lua?path=hadoop/common/hadoop-

==> Downloading from https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/h

######################################################################## 100.0%

==> Installing dependencies for hadoop: openjdk

==> Installing hadoop dependency: openjdk

==> Pouring openjdk-15.0.1.big_sur.bottle.1.tar.gz

==> Caveats

For the system Java wrappers to find this JDK, symlink it with

sudo ln -sfn /usr/local/opt/openjdk/libexec/openjdk.jdk /Library/Java/JavaVirtualMachines/openjdk.jdk

openjdk is keg-only, which means it was not symlinked into /usr/local,

because it shadows the macOS `java` wrapper.

If you need to have openjdk first in your PATH run:

echo 'export PATH="/usr/local/opt/openjdk/bin:$PATH"' >> ~/.zshrc

For compilers to find openjdk you may need to set:

export CPPFLAGS="-I/usr/local/opt/openjdk/include"

==> Summary

🍺 /usr/local/Cellar/openjdk/15.0.1: 614 files, 324.9MB

==> Installing hadoop

Error: Your CLT does not support macOS 11.

It is either outdated or was modified.

Please update your CLT or delete it if no updates are available.

Update them from Software Update in System Preferences or run:

softwareupdate --all --install --force

If that doesn't show you an update run:

sudo rm -rf /Library/Developer/CommandLineTools

sudo xcode-select --install

Alternatively, manually download them from:

https://developer.apple.com/download/more/.

Error: An exception occurred within a child process:

SystemExit: exit

sunyun@sunyundeMacBook-Pro ~ % softwareupdate --all --install --force

Software Update Tool

Finding available software

No updates are available.

sunyun@sunyundeMacBook-Pro ~ % sudo rm -rf /Library/Developer/CommandLineTools

Password:

sunyun@sunyundeMacBook-Pro ~ % sudo xcode-select --install

xcode-select: note: install requested for command line developer tools

sunyun@sunyundeMacBook-Pro ~ % brew install hadoop

==> Downloading https://www.apache.org/dyn/closer.lua?path=hadoop/common/hadoop-

Already downloaded: /Users/sunyun/Library/Caches/Homebrew/downloads/764c6a0ea7352bb8bb505989feee1b36dc628c2dcd6b93fef1ca829d191b4e1e--hadoop-3.3.0.tar.gz

🍺 /usr/local/Cellar/hadoop/3.3.0: 21,819 files, 954.7MB, built in 56 seconds

Configure hadoop-env.sh

sunyun@sunyundeMacBook-Pro hadoop % pwd

/usr/local/Cellar/hadoop/3.3.0/libexec/etc/hadoop

Add the JAVA_HOME path

sunyun@sunyundeMacBook-Pro ~ % /usr/libexec/java_home -V

Matching Java Virtual Machines (1):

1.8.0_241 (x86_64) "Oracle Corporation" - "Java SE 8" /Library/Java/JavaVirtualMachines/jdk1.8.0_241.jdk/Contents/Home

/Library/Java/JavaVirtualMachines/jdk1.8.0_241.jdk/Contents/Home

export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_241.jdk/Contents/Home

# On macOS, find the JDK location with /usr/libexec/java_home -V

Configure core-site.xml

Set the HDFS address and port
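Before editing the file, it may help to create the local directories that the configuration refers to, since Hadoop needs permission to write to them. The following is only a sketch: the helper name `make_hadoop_dirs` is hypothetical, and the `$HOME/data/hadoop` base directory is an assumption that mirrors the example paths used in this post.

```shell
# Hypothetical helper: create the tmp, name and data directories under one base path.
make_hadoop_dirs() {
  base="$1"   # e.g. "$HOME/data/hadoop" (assumed layout)
  mkdir -p "$base/tmp" "$base/filesystem/name" "$base/filesystem/data"
}

# Usage: make_hadoop_dirs "$HOME/data/hadoop"
```

Creating them as the same user that later starts Hadoop avoids permission problems.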

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:8020</value>

</property>

<!-- The following settings prevent the NameNode from failing to start after a system reboot -->

<!-- The /Users/your_username/data path can be anything you like, as long as Hadoop has permission to access it -->

<property>

<name>hadoop.tmp.dir</name>

<value>/Users/your_username/data/hadoop/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<!-- Local filesystem paths where the NameNode stores the name table and the DataNode stores block data -->

<property>

<name>dfs.name.dir</name>

<value>/Users/your_username/data/hadoop/filesystem/name</value>

<description>Determines where on the local filesystem the DFS name node should store the name table. If this is a comma-delimited list of directories then the name table is replicated in all of the directories, for redundancy. </description>

</property>

<property>

<name>dfs.data.dir</name>

<value>/Users/your_username/data/hadoop/filesystem/data</value>

<description>Determines where on the local filesystem a DFS data node should store its blocks. If this is a comma-delimited list of directories, then data will be stored in all named directories, typically on different devices. Directories that do not exist are ignored.</description>

</property>

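</configuration>

Configure mapred-site.xml

Set the MapReduce execution framework (YARN here, rather than a JobTracker address and port as in Hadoop 1.x). In version 3.1.1 this file is already present and can be edited directly.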

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

Configure yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>

</property>

</configuration>

Format HDFS

# Run from /usr/local/Cellar/hadoop/3.1.1/libexec (substitute the version you installed)

bin/hdfs namenode -format

Run

- sbin/start-all.sh: starts everything

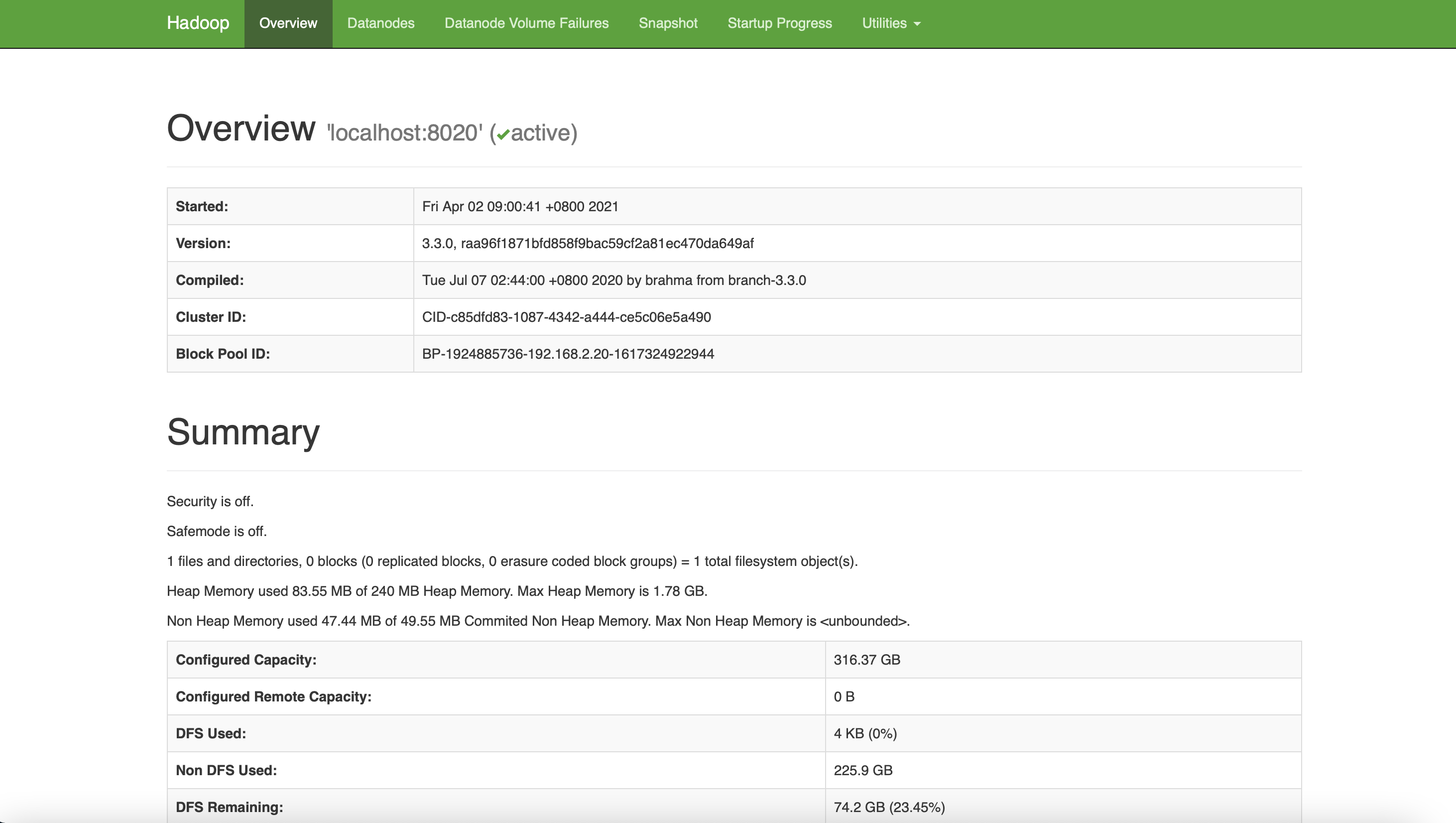

- sbin/start-dfs.sh: starts the NameNode and DataNode; NameNode UI at http://localhost:9870 (the port is 50070 in version 2.7)

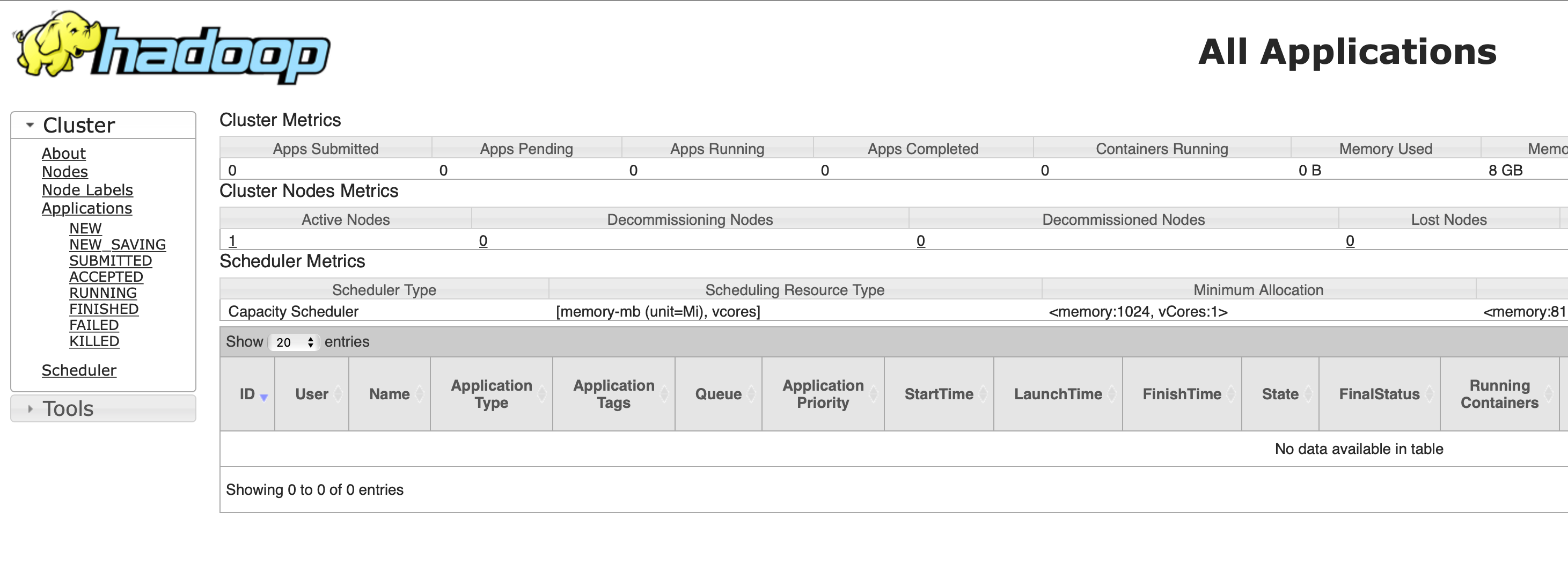

- sbin/start-yarn.sh: ResourceManager - http://localhost:8088 (All Applications)
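Once the scripts above have run, one way to confirm that nothing failed to start is to compare the `jps` output against the daemon names you expect. The sketch below assumes the five daemons of a single-node setup; the helper name `missing_daemons` is hypothetical and not part of Hadoop.

```shell
# Hypothetical helper: read jps-style output on stdin and print every
# expected Hadoop daemon that does not appear in it.
missing_daemons() {
  jps_output=$(cat)
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps prints "<pid> <ClassName>"; compare the whole second field so that
    # "NameNode" does not match "SecondaryNameNode"
    printf '%s\n' "$jps_output" \
      | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' \
      || echo "$daemon"
  done
}

# Usage: jps | missing_daemons    (prints nothing when all five are running)
```

If a name is printed, the log files of that daemon are the first place to look.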

Use jps to list the running processes

jps

# 34214 NameNode

# 34313 DataNode

# 34732 NodeManager

# 34637 ResourceManager

# 34446 SecondaryNameNode

# 34799 Jps

3. Install Hive

Download and install

brew install hive

sunyun@sunyundeMacBook-Pro ~ % brew install hive

==> Downloading https://mirrors.ustc.edu.cn/homebrew-bottles/bottles/libpng-1.6.37.big_sur.b

######################################################################## 100.0%

==> Downloading https://mirrors.ustc.edu.cn/homebrew-bottles/bottles/freetype-2.10.4.big_sur

######################################################################## 100.0%

==> Downloading https://mirrors.ustc.edu.cn/homebrew-bottles/bottles/openjdk%408-1.8.0%2B282

######################################################################## 100.0%

==> Downloading https://www.apache.org/dyn/closer.lua?path=hive/hive-3.1.2/apache-hive-3.1.2

==> Downloading from https://mirrors.tuna.tsinghua.edu.cn/apache/hive/hive-3.1.2/apache-hive

######################################################################## 100.0%

==> Installing dependencies for hive: libpng, freetype and openjdk@8

==> Installing hive dependency: libpng

==> Pouring libpng-1.6.37.big_sur.bottle.tar.gz

🍺 /usr/local/Cellar/libpng/1.6.37: 27 files, 1.3MB

==> Installing hive dependency: freetype

==> Pouring freetype-2.10.4.big_sur.bottle.tar.gz

🍺 /usr/local/Cellar/freetype/2.10.4: 64 files, 2.3MB

==> Installing hive dependency: openjdk@8

==> Pouring openjdk@8-1.8.0+282.big_sur.bottle.tar.gz

==> Caveats

For the system Java wrappers to find this JDK, symlink it with

sudo ln -sfn /usr/local/opt/openjdk@8/libexec/openjdk.jdk /Library/Java/JavaVirtualMachines/openjdk-8.jdk

openjdk@8 is keg-only, which means it was not symlinked into /usr/local,

because this is an alternate version of another formula.

If you need to have openjdk@8 first in your PATH run:

echo 'export PATH="/usr/local/opt/openjdk@8/bin:$PATH"' >> ~/.zshrc

For compilers to find openjdk@8 you may need to set:

export CPPFLAGS="-I/usr/local/opt/openjdk@8/include"

==> Summary

🍺 /usr/local/Cellar/openjdk@8/1.8.0+282: 742 files, 193.0MB

==> Installing hive

==> Caveats

If you want to use HCatalog with Pig, set $HCAT_HOME in your profile:

export HCAT_HOME=/usr/local/opt/hive/libexec/hcatalog

==> Summary

🍺 /usr/local/Cellar/hive/3.1.2_3: 1,126 files, 229.6MB, built in 10 seconds

==> Caveats

==> openjdk@8

For the system Java wrappers to find this JDK, symlink it with

sudo ln -sfn /usr/local/opt/openjdk@8/libexec/openjdk.jdk /Library/Java/JavaVirtualMachines/openjdk-8.jdk

openjdk@8 is keg-only, which means it was not symlinked into /usr/local,

because this is an alternate version of another formula.

If you need to have openjdk@8 first in your PATH run:

echo 'export PATH="/usr/local/opt/openjdk@8/bin:$PATH"' >> ~/.zshrc

For compilers to find openjdk@8 you may need to set:

export CPPFLAGS="-I/usr/local/opt/openjdk@8/include"

==> hive

If you want to use HCatalog with Pig, set $HCAT_HOME in your profile:

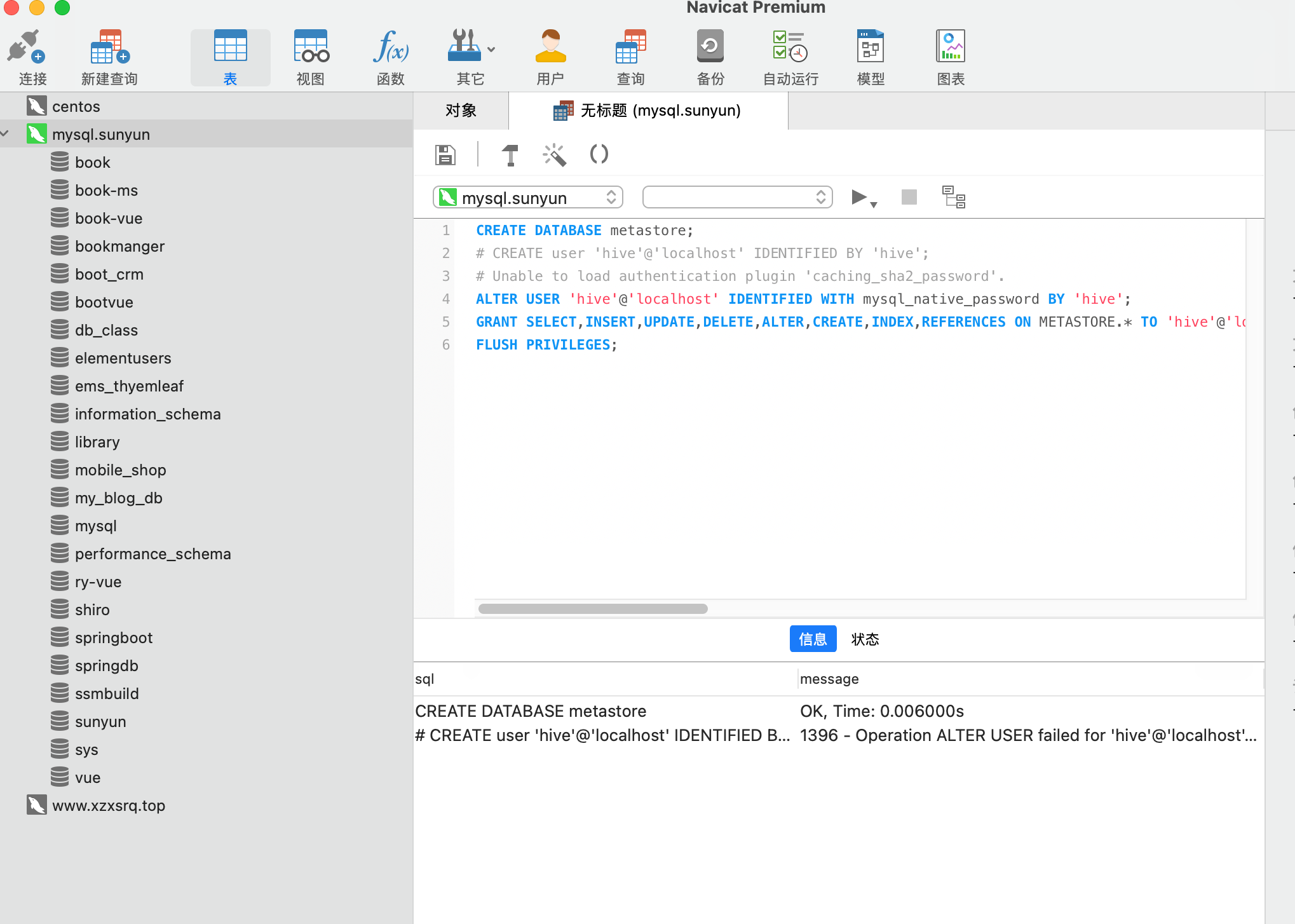

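export HCAT_HOME=/usr/local/opt/hive/libexec/hcatalog

Configure the Hive metastore database

By default Hive uses Derby as its metastore database; here we switch to the more familiar MySQL to store the metadata.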

# Log in to MySQL

mysql -uroot -p

# Run inside MySQL

CREATE DATABASE metastore;

# Create the hive user first; ALTER USER fails if the user does not exist

CREATE USER 'hive'@'localhost' IDENTIFIED BY 'hive';

# If Hive then reports: Unable to load authentication plugin 'caching_sha2_password', switch the user to mysql_native_password

ALTER USER 'hive'@'localhost' IDENTIFIED WITH mysql_native_password BY 'hive';

GRANT SELECT,INSERT,UPDATE,DELETE,ALTER,CREATE,INDEX,REFERENCES ON metastore.* TO 'hive'@'localhost';

FLUSH PRIVILEGES;

Configure Hive

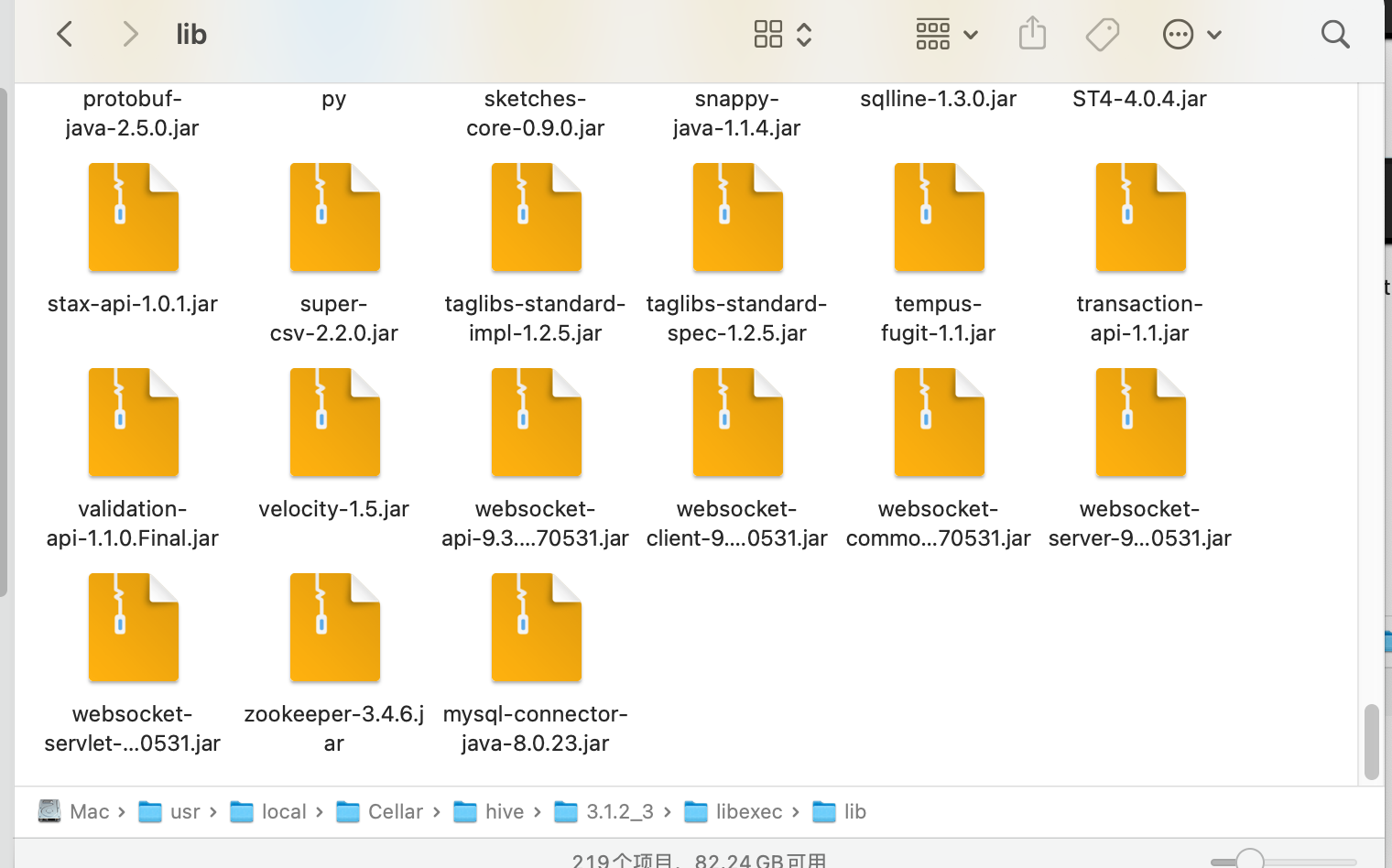

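Install the mysql-connector JAR

Download: https://dev.mysql.com/downloads/connector/j/

Extract the downloaded archive and copy the JAR into Hive's lib directory (adjust the Hive version in the path to the one you installed):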

cp mysql-connector-java-5.1.44-bin.jar /usr/local/Cellar/hive/3.1.1/libexec/lib/

Configure hive-site.xml

Modify the following properties

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost/metastore</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive</value>

</property>

<property>

<name>hive.exec.local.scratchdir</name>

<value>/Users/sunyun/data/hive</value>

</property>

<property>

<name>hive.querylog.location</name>

<value>/Users/sunyun/data/hive/querylog</value>

</property>

<property>

<name>hive.downloaded.resources.dir</name>

<value>/Users/sunyun/data/hive/download</value>

</property>

<property>

<name>hive.server2.logging.operation.log.location</name>

<value>/Users/sunyun/data/hive/log</value>

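</property>

Note: line 3210 may contain an illegal character entity (code 0x8 according to the error message). It is best to delete it; otherwise initializing the metastore database fails with the WstxParsingException shown in the log below.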
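One way to remove the offending entity without opening the file in an editor is sketched here. It assumes the entity is written as `&#8;`, which is consistent with the code 0x8 in the error but should be checked against your own file; the helper name `strip_bad_entity` is hypothetical, and it replaces the entity with a space instead of deleting it outright so that neighbouring words stay separated.

```shell
# Hypothetical helper: replace the illegal &#8; entity in a Hive config file.
strip_bad_entity() {
  # -i.bak edits in place and keeps a backup copy next to the file;
  # this form is accepted by both BSD sed (macOS) and GNU sed
  sed -i.bak 's/&#8;/ /g' "$1"
}

# Usage (path taken from the error message; adjust the version as needed):
# strip_bad_entity /usr/local/Cellar/hive/3.1.1/libexec/conf/hive-site.xml
```

The stack trace that follows is the error this avoids.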

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/Cellar/hive/3.1.1/libexec/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/Cellar/hadoop/3.1.1/libexec/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Exception in thread "main" java.lang.RuntimeException: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8

at [row,col,system-id]: [3210,96,"file:/usr/local/Cellar/hive/3.1.1/libexec/conf/hive-site.xml"]

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:3003)

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2931)

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2806)

at org.apache.hadoop.conf.Configuration.get(Configuration.java:1460)

at org.apache.hadoop.hive.conf.HiveConf.getVar(HiveConf.java:4990)

at org.apache.hadoop.hive.conf.HiveConf.getVar(HiveConf.java:5063)

at org.apache.hadoop.hive.conf.HiveConf.initialize(HiveConf.java:5150)

at org.apache.hadoop.hive.conf.HiveConf.<init>(HiveConf.java:5098)

at org.apache.hive.beeline.HiveSchemaTool.<init>(HiveSchemaTool.java:96)

at org.apache.hive.beeline.HiveSchemaTool.main(HiveSchemaTool.java:1473)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:318)

at org.apache.hadoop.util.RunJar.main(RunJar.java:232)

Caused by: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8

at [row,col,system-id]: [3210,96,"file:/usr/local/Cellar/hive/3.1.1/libexec/conf/hive-site.xml"]

at com.ctc.wstx.sr.StreamScanner.constructWfcException(StreamScanner.java:621)

at com.ctc.wstx.sr.StreamScanner.throwParseError(StreamScanner.java:491)

at com.ctc.wstx.sr.StreamScanner.reportIllegalChar(StreamScanner.java:2456)

at com.ctc.wstx.sr.StreamScanner.validateChar(StreamScanner.java:2403)

at com.ctc.wstx.sr.StreamScanner.resolveCharEnt(StreamScanner.java:2369)

at com.ctc.wstx.sr.StreamScanner.fullyResolveEntity(StreamScanner.java:1515)

at com.ctc.wstx.sr.BasicStreamReader.nextFromTree(BasicStreamReader.java:2828)

at com.ctc.wstx.sr.BasicStreamReader.next(BasicStreamReader.java:1123)

at org.apache.hadoop.conf.Configuration$Parser.parseNext(Configuration.java:3257)

at org.apache.hadoop.conf.Configuration$Parser.parse(Configuration.java:3063)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2986)

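... 15 more

Initialize the metastore database

schematool -initSchema -dbType mysql

Now log in to the metastore database and you can see the tables that were created (only some of them are shown here)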

mysql> show tables;

+-------------------------------+

| Tables_in_metastore |

+-------------------------------+

| AUX_TABLE |

| BUCKETING_COLS |

| CDS |

| COLUMNS_V2 |

| COMPACTION_QUEUE |

| COMPLETED_COMPACTIONS |

| COMPLETED_TXN_COMPONENTS |

| CTLGS |

| DATABASE_PARAMS |

| DB_PRIVS |

Run Hive

4. Install Spark

brew install apache-spark

sunyun@sunyundeMacBook-Pro bin % brew install apache-spark

==> Downloading https://mirrors.ustc.edu.cn/homebrew-bottles/bottles/openjdk%4011-11.0.9.big

==> Downloading from https://d29vzk4ow07wi7.cloudfront.net/610ed0bd964812cdce0f6e1a4b8c06fd8

######################################################################## 100.0%

==> Downloading https://www.apache.org/dyn/closer.lua?path=spark/spark-3.0.1/spark-3.0.1-bin

==> Downloading from https://mirrors.tuna.tsinghua.edu.cn/apache/spark/spark-3.0.1/spark-3.0

##O#- #

curl: (22) The requested URL returned error: 404

Trying a mirror...

==> Downloading https://archive.apache.org/dist/spark/spark-3.0.1/spark-3.0.1-bin-hadoop3.2.

######################################################################## 100.0%

==> Installing dependencies for apache-spark: openjdk@11

==> Installing apache-spark dependency: openjdk@11

==> Pouring openjdk@11-11.0.9.big_sur.bottle.tar.gz

==> Caveats

For the system Java wrappers to find this JDK, symlink it with

sudo ln -sfn /usr/local/opt/openjdk@11/libexec/openjdk.jdk /Library/Java/JavaVirtualMachines/openjdk-11.jdk

openjdk@11 is keg-only, which means it was not symlinked into /usr/local,

because this is an alternate version of another formula.

If you need to have openjdk@11 first in your PATH run:

echo 'export PATH="/usr/local/opt/openjdk@11/bin:$PATH"' >> ~/.zshrc

For compilers to find openjdk@11 you may need to set:

export CPPFLAGS="-I/usr/local/opt/openjdk@11/include"

==> Summary

🍺 /usr/local/Cellar/openjdk@11/11.0.9: 653 files, 297.2MB

==> Installing apache-spark

🍺 /usr/local/Cellar/apache-spark/3.0.1: 1,191 files, 237.4MB, built in 10 seconds

==> Caveats

==> openjdk@11

For the system Java wrappers to find this JDK, symlink it with

sudo ln -sfn /usr/local/opt/openjdk@11/libexec/openjdk.jdk /Library/Java/JavaVirtualMachines/openjdk-11.jdk

openjdk@11 is keg-only, which means it was not symlinked into /usr/local,

because this is an alternate version of another formula.

If you need to have openjdk@11 first in your PATH run:

echo 'export PATH="/usr/local/opt/openjdk@11/bin:$PATH"' >> ~/.zshrc

For compilers to find openjdk@11 you may need to set:

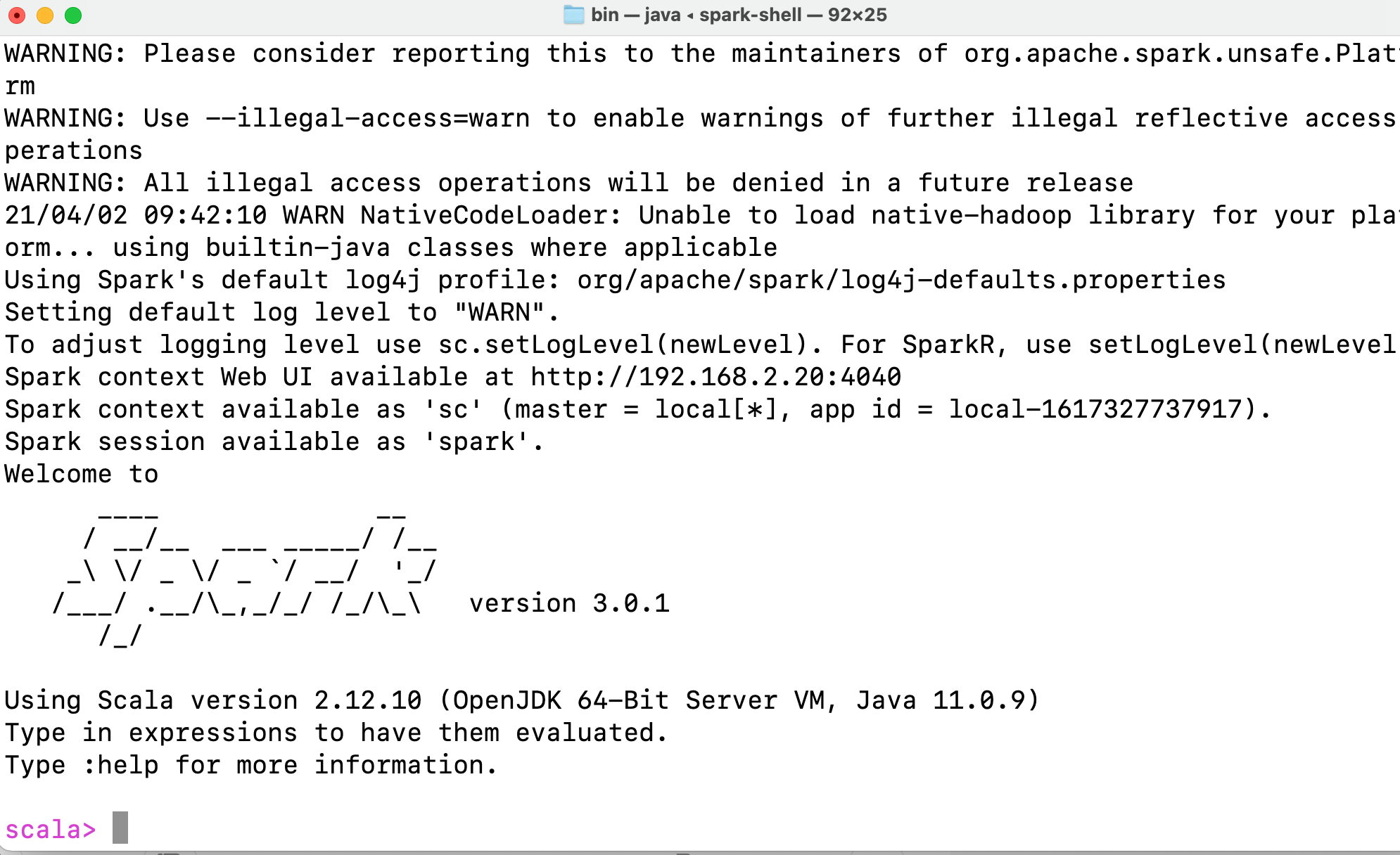

export CPPFLAGS="-I/usr/local/opt/openjdk@11/include"

Usually a plain install is all that is needed; afterwards just run spark-shell.

Open issue: the Hive configuration still has problems.