title: Submitting Python Jobs in Hadoop

author: fejxc

categories: hadoop

tags:

- Big Data

date: 2021-5-20 09:51:03

Problem 1:

py4j.protocol.Py4JJavaError: An error occurred while calling o20.partitions.

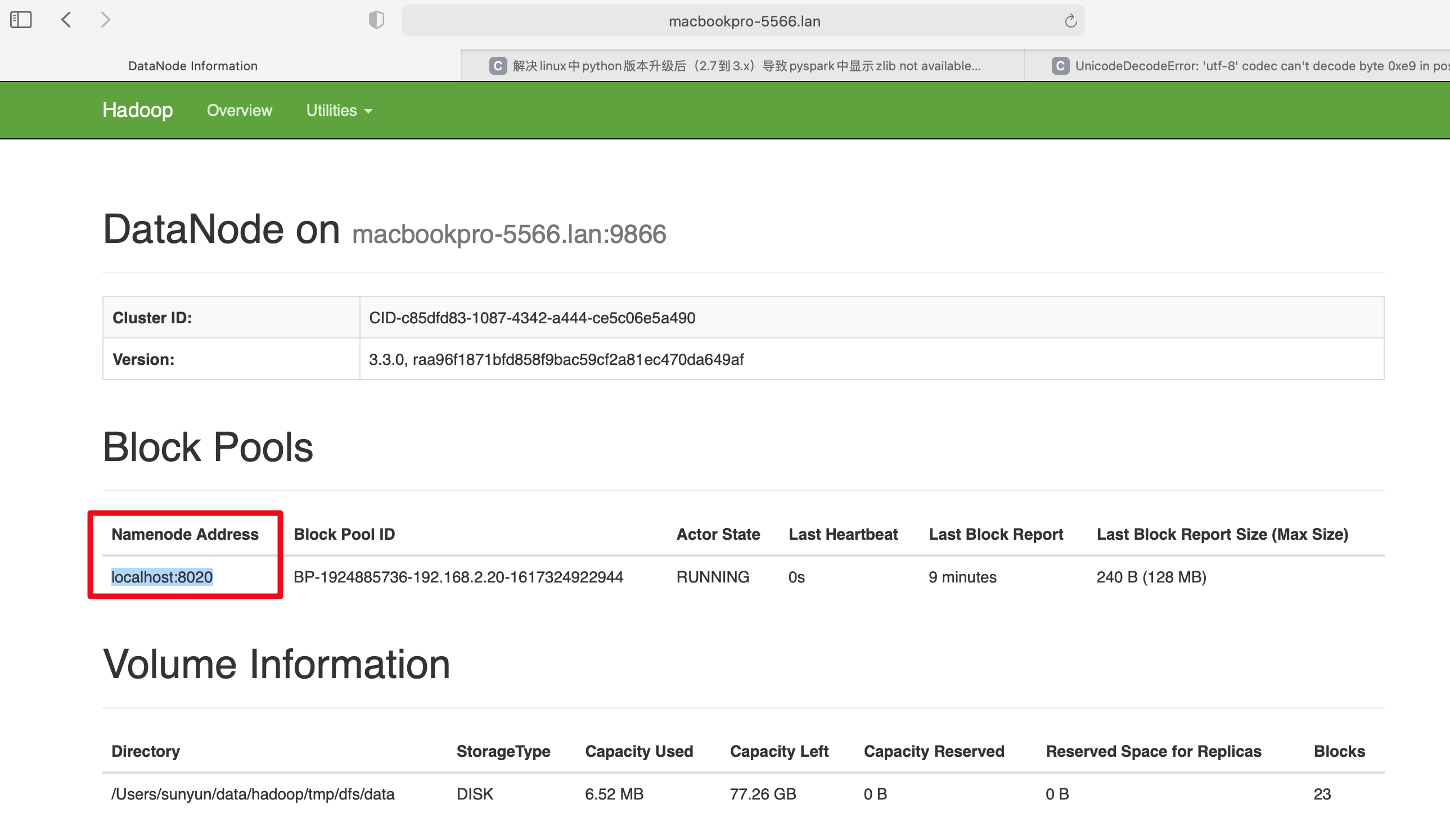

: java.io.IOException: Incomplete HDFS URI, no host: hdfs:/ml-100k/u.data解决1:

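Pass a complete URI that includes the NameNode host and port, plus the full path of the file in HDFS:

```python
lines = sc.textFile("hdfs://localhost:8020/user/sunyun/ml-100k/u.data")
```

Problem 2: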

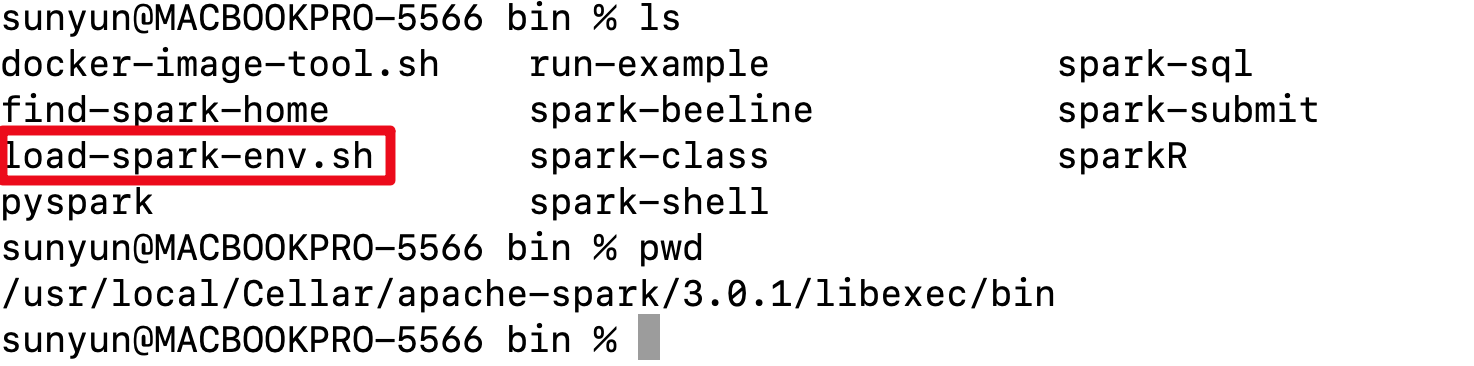

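The Python version configured in Spark's environment did not match the Python I had manually upgraded to. PySpark refuses to run when the driver and the workers use different Python minor versions.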

Solution 2:

Edit `/usr/local/Cellar/apache-spark/3.0.1/libexec/bin/load-spark-env.sh` (Spark 3.0.1 installed with Homebrew) and add an `export PYSPARK_PYTHON` line:

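```bash
if [ -z "${SPARK_HOME}" ]; then
  source "$(dirname "$0")"/find-spark-home
fi

SPARK_ENV_SH="spark-env.sh"
if [ -z "$SPARK_ENV_LOADED" ]; then
  export SPARK_ENV_LOADED=1

  export SPARK_CONF_DIR="${SPARK_CONF_DIR:-"${SPARK_HOME}"/conf}"

  # Added line: make PySpark use this Python 3 interpreter
  export PYSPARK_PYTHON=/usr/bin/python3

  SPARK_ENV_SH="${SPARK_CONF_DIR}/${SPARK_ENV_SH}"
  if [[ -f "${SPARK_ENV_SH}" ]]; then
    # Promote all variable declarations to environment (exported) variables
    set -a
    . ${SPARK_ENV_SH}
    set +a
  fi
fi
```

The added line `export PYSPARK_PYTHON=/usr/bin/python3` tells Spark to use the Python 3 interpreter at `/usr/bin/python3`. Because this script also sources `${SPARK_CONF_DIR}/spark-env.sh` (the `SPARK_ENV_SH` block), putting the same `export` line in `spark-env.sh` should work as well and avoids editing a file under `bin/`.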

Reference: https://blog.csdn.net/weixin_43114954/article/details/113094958
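To confirm the fix before resubmitting, a small standard-library script can compare the Python version it runs under with the version of the interpreter named by `PYSPARK_PYTHON`. This is only a sketch: run it with the Python you intend to use for the driver, and note that the helper names `interpreter_version` and `check_pyspark_python` are hypothetical, not part of Spark.

```python
import os
import subprocess
import sys


def interpreter_version(python_path):
    """Return the 'major.minor' version string of the given Python interpreter."""
    out = subprocess.run(
        [python_path, "-c", "import sys; print('%d.%d' % sys.version_info[:2])"],
        capture_output=True, text=True, check=True,
    )
    return out.stdout.strip()


def check_pyspark_python(env=None):
    """Return (current_version, pyspark_python_version).

    The second value is None when PYSPARK_PYTHON is not set in `env`.
    """
    env = os.environ if env is None else env
    current = "%d.%d" % sys.version_info[:2]
    worker_python = env.get("PYSPARK_PYTHON")
    if worker_python is None:
        return current, None
    return current, interpreter_version(worker_python)


if __name__ == "__main__":
    current, worker = check_pyspark_python()
    if worker is None:
        print("PYSPARK_PYTHON is not set")
    elif current != worker:
        print(f"Mismatch: this Python is {current}, PYSPARK_PYTHON is {worker}")
    else:
        print(f"OK: both use Python {current}")
```

Comparing only `major.minor` matches the kind of mismatch described above; patch-level differences are ignored.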

Problem 3:

```
UnicodeDecodeError: 'utf-8' codec can't decode byte 0xe9 in position 2892: invalid continuation byte
```

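Solution 3:

The MovieLens `u.item` file is not UTF-8 encoded, so open it with the ISO-8859-1 (Latin-1) encoding instead of the default UTF-8. In ISO-8859-1 every byte maps to a character (0xE9 is `é`), so decoding cannot fail:

```python
import codecs

f1 = codecs.open("fileName", 'r', encoding="ISO-8859-1")
```

In Python 3 the built-in `open()` accepts the same `encoding` argument, which is what `loadMovieNames` in the source below uses.

Reference: https://blog.csdn.net/ningzhimeng/article/details/78488822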
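The failure is easy to reproduce with the standard library alone. The sketch writes a made-up, `u.item`-style record as Latin-1 bytes, shows that decoding it as UTF-8 raises `UnicodeDecodeError`, and then reads it back with `encoding="ISO-8859-1"`. The sample record and the helper names `load_titles` and `demo` are illustrative assumptions, not real MovieLens data or code.

```python
import os
import tempfile

# Hypothetical u.item-style record (not real MovieLens data); 'é' is byte 0xE9 in Latin-1.
SAMPLE = "42|Café Example (1999)|01-Jan-1999\n"


def load_titles(path, encoding="ISO-8859-1"):
    """Build {movie_id: title} from a '|'-separated file, decoding with `encoding`."""
    titles = {}
    with open(path, "r", encoding=encoding) as f:
        for line in f:
            fields = line.split("|")
            titles[int(fields[0])] = fields[1]
    return titles


def demo():
    """Write SAMPLE as Latin-1 bytes, then try UTF-8 and Latin-1 decoding."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "u.item")
        with open(path, "wb") as f:
            f.write(SAMPLE.encode("ISO-8859-1"))
        try:
            load_titles(path, encoding="utf-8")
            utf8_ok = True
        except UnicodeDecodeError as err:
            print("UTF-8 failed:", err)  # same kind of error as Problem 3
            utf8_ok = False
        return utf8_ok, load_titles(path)


if __name__ == "__main__":
    print(demo())
```

Latin-1 never raises here because all 256 byte values are defined in it; the trade-off is that a file in some other encoding would be decoded silently into the wrong characters rather than failing.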

Python source (`RatedMovieSpark.py`, the file name used in the `spark-submit` command below):

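```python
from pyspark import SparkConf, SparkContext


def loadMovieNames():
    """Map movie ID -> title from the local MovieLens u.item file."""
    movieNames = {}
    # Read from the local filesystem, relative to the working directory;
    # u.item is Latin-1 encoded, not UTF-8 (see Problem 3)
    with open("ml-100k/u.item", 'r', encoding="ISO-8859-1") as f:
        for line in f:
            fields = line.split('|')
            movieNames[int(fields[0])] = fields[1]
    return movieNames


def parseInput(line):
    """Turn a u.data line (user, movie, rating, timestamp) into (movieID, (rating, 1.0))."""
    fields = line.split()
    return (int(fields[1]), (float(fields[2]), 1.0))


if __name__ == "__main__":
    conf = SparkConf().setAppName("WorstMovies")
    sc = SparkContext(conf=conf)

    # ID -> title lookup, built on the driver
    movieNames = loadMovieNames()

    # Raw ratings from HDFS (complete URI with host and port, see Problem 1)
    lines = sc.textFile("hdfs://localhost:8020/user/sunyun/ml-100k/u.data")

    # (movieID, (rating, 1.0))
    movieRatings = lines.map(parseInput)

    # Sum ratings and counts per movie: (movieID, (totalRating, count))
    ratingTotalsAndCount = movieRatings.reduceByKey(
        lambda movie1, movie2: (movie1[0] + movie2[0], movie1[1] + movie2[1]))

    # Average rating per movie: (movieID, average)
    averageRatings = ratingTotalsAndCount.mapValues(
        lambda totalAndCount: totalAndCount[0] / totalAndCount[1])

    # Sort ascending by average so the worst-rated movies come first
    sortedMovies = averageRatings.sortBy(lambda x: x[1])

    # Print the ten lowest-rated movies with their average rating
    results = sortedMovies.take(10)
    for result in results:
        print(movieNames[result[0]], result[1])
```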
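Since Problem 1 was caused only by the path passed to `sc.textFile`, a cheap check of the URI before submitting can catch the same mistake. A minimal sketch using the standard `urllib.parse.urlsplit`; the helper name `check_hdfs_uri` is hypothetical. It is stricter than Hadoop itself, which may be able to fill in a missing host from `fs.defaultFS` when that is configured as an `hdfs://` address.

```python
from urllib.parse import urlsplit


def check_hdfs_uri(uri):
    """Return (host, port, path) for an hdfs://host[:port]/path URI.

    Raises ValueError when the scheme is not hdfs or the URI has no host,
    the situation reported as "Incomplete HDFS URI, no host" in Problem 1.
    """
    parts = urlsplit(uri)
    if parts.scheme != "hdfs":
        raise ValueError(f"not an hdfs URI: {uri}")
    if not parts.hostname:
        raise ValueError(f"incomplete HDFS URI, no host: {uri}")
    return parts.hostname, parts.port, parts.path


if __name__ == "__main__":
    for uri in ("hdfs:/ml-100k/u.data",
                "hdfs://localhost:8020/user/sunyun/ml-100k/u.data"):
        try:
            print(uri, "->", check_hdfs_uri(uri))
        except ValueError as err:
            print(err)
```

With the original path the check raises `ValueError`; the corrected path returns `('localhost', 8020, '/user/sunyun/ml-100k/u.data')`.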

Output:

```
sunyun@MACBOOKPRO-5566 Downloads % spark-submit RatedMovieSpark.py
WARNING: An illegal reflective access operation has occurred
WARNING: Illegal reflective access by org.apache.spark.unsafe.Platform (file:/usr/local/Cellar/apache-spark/3.0.1/libexec/jars/spark-unsafe_2.12-3.0.1.jar) to constructor java.nio.DirectByteBuffer(long,int)
WARNING: Please consider reporting this to the maintainers of org.apache.spark.unsafe.Platform
WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations
WARNING: All illegal access operations will be denied in a future release
21/05/20 09:54:20 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
21/05/20 09:54:20 INFO SparkContext: Running Spark version 3.0.1
21/05/20 09:54:21 INFO ResourceUtils: ==============================================================
21/05/20 09:54:21 INFO ResourceUtils: Resources for spark.driver:
21/05/20 09:54:21 INFO ResourceUtils: ==============================================================
21/05/20 09:54:21 INFO SparkContext: Submitted application: WorstMovies
21/05/20 09:54:21 INFO SecurityManager: Changing view acls to: sunyun
21/05/20 09:54:21 INFO SecurityManager: Changing modify acls to: sunyun
21/05/20 09:54:21 INFO SecurityManager: Changing view acls groups to:
21/05/20 09:54:21 INFO SecurityManager: Changing modify acls groups to:
21/05/20 09:54:21 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(sunyun); groups with view permissions: Set(); users with modify permissions: Set(sunyun); groups with modify permissions: Set()
21/05/20 09:54:21 INFO Utils: Successfully started service 'sparkDriver' on port 64230.
21/05/20 09:54:21 INFO SparkEnv: Registering MapOutputTracker
21/05/20 09:54:21 INFO SparkEnv: Registering BlockManagerMaster
21/05/20 09:54:21 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information
21/05/20 09:54:21 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up
21/05/20 09:54:21 INFO SparkEnv: Registering BlockManagerMasterHeartbeat
21/05/20 09:54:21 INFO DiskBlockManager: Created local directory at /private/var/folders/4k/dwx5pnzx3k596x3ct39tqskw0000gn/T/blockmgr-1eb573bd-b6c4-40b6-978d-c161bfc56bcd
21/05/20 09:54:21 INFO MemoryStore: MemoryStore started with capacity 434.4 MiB
21/05/20 09:54:21 INFO SparkEnv: Registering OutputCommitCoordinator
21/05/20 09:54:21 INFO Utils: Successfully started service 'SparkUI' on port 4040.
21/05/20 09:54:21 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://macbookpro-5566.lan:4040
21/05/20 09:54:22 INFO Executor: Starting executor ID driver on host macbookpro-5566.lan
21/05/20 09:54:22 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 64240.
21/05/20 09:54:22 INFO NettyBlockTransferService: Server created on macbookpro-5566.lan:64240
21/05/20 09:54:22 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy
21/05/20 09:54:22 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, macbookpro-5566.lan, 64240, None)
21/05/20 09:54:22 INFO BlockManagerMasterEndpoint: Registering block manager macbookpro-5566.lan:64240 with 434.4 MiB RAM, BlockManagerId(driver, macbookpro-5566.lan, 64240, None)
21/05/20 09:54:22 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, macbookpro-5566.lan, 64240, None)
21/05/20 09:54:22 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, macbookpro-5566.lan, 64240, None)
21/05/20 09:54:22 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 175.8 KiB, free 434.2 MiB)
21/05/20 09:54:22 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 27.1 KiB, free 434.2 MiB)
21/05/20 09:54:22 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on macbookpro-5566.lan:64240 (size: 27.1 KiB, free: 434.4 MiB)
21/05/20 09:54:22 INFO SparkContext: Created broadcast 0 from textFile at NativeMethodAccessorImpl.java:0
21/05/20 09:54:23 INFO FileInputFormat: Total input files to process : 1
21/05/20 09:54:23 INFO SparkContext: Starting job: sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30
21/05/20 09:54:23 INFO DAGScheduler: Registering RDD 3 (reduceByKey at /Users/sunyun/Downloads/RatedMovieSpark.py:26) as input to shuffle 0
21/05/20 09:54:23 INFO DAGScheduler: Got job 0 (sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30) with 2 output partitions
21/05/20 09:54:23 INFO DAGScheduler: Final stage: ResultStage 1 (sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30)
21/05/20 09:54:23 INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage 0)
21/05/20 09:54:23 INFO DAGScheduler: Missing parents: List(ShuffleMapStage 0)
21/05/20 09:54:23 INFO DAGScheduler: Submitting ShuffleMapStage 0 (PairwiseRDD[3] at reduceByKey at /Users/sunyun/Downloads/RatedMovieSpark.py:26), which has no missing parents
21/05/20 09:54:24 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 11.4 KiB, free 434.2 MiB)
21/05/20 09:54:24 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 7.0 KiB, free 434.2 MiB)
21/05/20 09:54:24 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on macbookpro-5566.lan:64240 (size: 7.0 KiB, free: 434.4 MiB)
21/05/20 09:54:24 INFO SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:1223
21/05/20 09:54:24 INFO DAGScheduler: Submitting 2 missing tasks from ShuffleMapStage 0 (PairwiseRDD[3] at reduceByKey at /Users/sunyun/Downloads/RatedMovieSpark.py:26) (first 15 tasks are for partitions Vector(0, 1))
21/05/20 09:54:24 INFO TaskSchedulerImpl: Adding task set 0.0 with 2 tasks
21/05/20 09:54:24 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, macbookpro-5566.lan, executor driver, partition 0, NODE_LOCAL, 7378 bytes)
21/05/20 09:54:24 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, macbookpro-5566.lan, executor driver, partition 1, NODE_LOCAL, 7378 bytes)
21/05/20 09:54:24 INFO Executor: Running task 1.0 in stage 0.0 (TID 1)
21/05/20 09:54:24 INFO Executor: Running task 0.0 in stage 0.0 (TID 0)
21/05/20 09:54:24 INFO HadoopRDD: Input split: hdfs://localhost:8020/user/sunyun/ml-100k/u.data:989613+989613
21/05/20 09:54:24 INFO HadoopRDD: Input split: hdfs://localhost:8020/user/sunyun/ml-100k/u.data:0+989613
/usr/local/Cellar/apache-spark/3.0.1/libexec/python/lib/pyspark.zip/pyspark/shuffle.py:60: UserWarning: Please install psutil to have better support with spilling
  warnings.warn("Please install psutil to have better "
/usr/local/Cellar/apache-spark/3.0.1/libexec/python/lib/pyspark.zip/pyspark/shuffle.py:60: UserWarning: Please install psutil to have better support with spilling
  warnings.warn("Please install psutil to have better "
21/05/20 09:54:25 INFO PythonRunner: Times: total = 955, boot = 597, init = 38, finish = 320
21/05/20 09:54:25 INFO PythonRunner: Times: total = 867, boot = 505, init = 31, finish = 331
21/05/20 09:54:25 INFO Executor: Finished task 1.0 in stage 0.0 (TID 1). 1857 bytes result sent to driver
21/05/20 09:54:25 INFO Executor: Finished task 0.0 in stage 0.0 (TID 0). 1857 bytes result sent to driver
21/05/20 09:54:25 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 1651 ms on macbookpro-5566.lan (executor driver) (1/2)
21/05/20 09:54:25 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 1626 ms on macbookpro-5566.lan (executor driver) (2/2)
21/05/20 09:54:25 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool
21/05/20 09:54:25 INFO PythonAccumulatorV2: Connected to AccumulatorServer at host: 127.0.0.1 port: 64243
21/05/20 09:54:25 INFO DAGScheduler: ShuffleMapStage 0 (reduceByKey at /Users/sunyun/Downloads/RatedMovieSpark.py:26) finished in 1.875 s
21/05/20 09:54:25 INFO DAGScheduler: looking for newly runnable stages
21/05/20 09:54:25 INFO DAGScheduler: running: Set()
21/05/20 09:54:25 INFO DAGScheduler: waiting: Set(ResultStage 1)
21/05/20 09:54:25 INFO DAGScheduler: failed: Set()
21/05/20 09:54:25 INFO DAGScheduler: Submitting ResultStage 1 (PythonRDD[6] at sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30), which has no missing parents
21/05/20 09:54:25 INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 10.4 KiB, free 434.2 MiB)
21/05/20 09:54:25 INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 5.8 KiB, free 434.2 MiB)
21/05/20 09:54:25 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on macbookpro-5566.lan:64240 (size: 5.8 KiB, free: 434.4 MiB)
21/05/20 09:54:25 INFO SparkContext: Created broadcast 2 from broadcast at DAGScheduler.scala:1223
21/05/20 09:54:25 INFO DAGScheduler: Submitting 2 missing tasks from ResultStage 1 (PythonRDD[6] at sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30) (first 15 tasks are for partitions Vector(0, 1))
21/05/20 09:54:25 INFO TaskSchedulerImpl: Adding task set 1.0 with 2 tasks
21/05/20 09:54:25 INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID 2, macbookpro-5566.lan, executor driver, partition 0, NODE_LOCAL, 7143 bytes)
21/05/20 09:54:25 INFO TaskSetManager: Starting task 1.0 in stage 1.0 (TID 3, macbookpro-5566.lan, executor driver, partition 1, NODE_LOCAL, 7143 bytes)
21/05/20 09:54:25 INFO Executor: Running task 1.0 in stage 1.0 (TID 3)
21/05/20 09:54:25 INFO Executor: Running task 0.0 in stage 1.0 (TID 2)
21/05/20 09:54:25 INFO ShuffleBlockFetcherIterator: Getting 2 (15.2 KiB) non-empty blocks including 2 (15.2 KiB) local and 0 (0.0 B) host-local and 0 (0.0 B) remote blocks
21/05/20 09:54:25 INFO ShuffleBlockFetcherIterator: Getting 2 (16.0 KiB) non-empty blocks including 2 (16.0 KiB) local and 0 (0.0 B) host-local and 0 (0.0 B) remote blocks
21/05/20 09:54:25 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 15 ms
21/05/20 09:54:25 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 13 ms
21/05/20 09:54:25 INFO PythonRunner: Times: total = 19, boot = -626, init = 641, finish = 4
21/05/20 09:54:25 INFO PythonRunner: Times: total = 19, boot = -614, init = 629, finish = 4
21/05/20 09:54:25 INFO Executor: Finished task 1.0 in stage 1.0 (TID 3). 1808 bytes result sent to driver
21/05/20 09:54:25 INFO Executor: Finished task 0.0 in stage 1.0 (TID 2). 1808 bytes result sent to driver
21/05/20 09:54:25 INFO TaskSetManager: Finished task 1.0 in stage 1.0 (TID 3) in 119 ms on macbookpro-5566.lan (executor driver) (1/2)
21/05/20 09:54:25 INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID 2) in 123 ms on macbookpro-5566.lan (executor driver) (2/2)
21/05/20 09:54:25 INFO TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool
21/05/20 09:54:25 INFO DAGScheduler: ResultStage 1 (sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30) finished in 0.138 s
21/05/20 09:54:25 INFO DAGScheduler: Job 0 is finished. Cancelling potential speculative or zombie tasks for this job
21/05/20 09:54:25 INFO TaskSchedulerImpl: Killing all running tasks in stage 1: Stage finished
21/05/20 09:54:25 INFO DAGScheduler: Job 0 finished: sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30, took 2.105647 s
21/05/20 09:54:26 INFO SparkContext: Starting job: sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30
21/05/20 09:54:26 INFO DAGScheduler: Got job 1 (sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30) with 2 output partitions
21/05/20 09:54:26 INFO DAGScheduler: Final stage: ResultStage 3 (sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30)
21/05/20 09:54:26 INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage 2)
21/05/20 09:54:26 INFO DAGScheduler: Missing parents: List()
21/05/20 09:54:26 INFO DAGScheduler: Submitting ResultStage 3 (PythonRDD[7] at sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30), which has no missing parents
21/05/20 09:54:26 INFO MemoryStore: Block broadcast_3 stored as values in memory (estimated size 10.3 KiB, free 434.2 MiB)
21/05/20 09:54:26 INFO MemoryStore: Block broadcast_3_piece0 stored as bytes in memory (estimated size 5.9 KiB, free 434.2 MiB)
21/05/20 09:54:26 INFO BlockManagerInfo: Added broadcast_3_piece0 in memory on macbookpro-5566.lan:64240 (size: 5.9 KiB, free: 434.4 MiB)
21/05/20 09:54:26 INFO SparkContext: Created broadcast 3 from broadcast at DAGScheduler.scala:1223
21/05/20 09:54:26 INFO DAGScheduler: Submitting 2 missing tasks from ResultStage 3 (PythonRDD[7] at sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30) (first 15 tasks are for partitions Vector(0, 1))
21/05/20 09:54:26 INFO TaskSchedulerImpl: Adding task set 3.0 with 2 tasks
21/05/20 09:54:26 INFO TaskSetManager: Starting task 0.0 in stage 3.0 (TID 4, macbookpro-5566.lan, executor driver, partition 0, NODE_LOCAL, 7143 bytes)
21/05/20 09:54:26 INFO TaskSetManager: Starting task 1.0 in stage 3.0 (TID 5, macbookpro-5566.lan, executor driver, partition 1, NODE_LOCAL, 7143 bytes)
21/05/20 09:54:26 INFO Executor: Running task 0.0 in stage 3.0 (TID 4)
21/05/20 09:54:26 INFO Executor: Running task 1.0 in stage 3.0 (TID 5)
21/05/20 09:54:26 INFO ShuffleBlockFetcherIterator: Getting 2 (16.0 KiB) non-empty blocks including 2 (16.0 KiB) local and 0 (0.0 B) host-local and 0 (0.0 B) remote blocks
21/05/20 09:54:26 INFO ShuffleBlockFetcherIterator: Getting 2 (15.2 KiB) non-empty blocks including 2 (15.2 KiB) local and 0 (0.0 B) host-local and 0 (0.0 B) remote blocks
21/05/20 09:54:26 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 1 ms
21/05/20 09:54:26 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 1 ms
21/05/20 09:54:26 INFO PythonRunner: Times: total = 9, boot = -107, init = 111, finish = 5
21/05/20 09:54:26 INFO PythonRunner: Times: total = 9, boot = -108, init = 112, finish = 5
21/05/20 09:54:26 INFO Executor: Finished task 0.0 in stage 3.0 (TID 4). 2074 bytes result sent to driver
21/05/20 09:54:26 INFO Executor: Finished task 1.0 in stage 3.0 (TID 5). 2101 bytes result sent to driver
21/05/20 09:54:26 INFO TaskSetManager: Finished task 0.0 in stage 3.0 (TID 4) in 37 ms on macbookpro-5566.lan (executor driver) (1/2)
21/05/20 09:54:26 INFO TaskSetManager: Finished task 1.0 in stage 3.0 (TID 5) in 36 ms on macbookpro-5566.lan (executor driver) (2/2)
21/05/20 09:54:26 INFO TaskSchedulerImpl: Removed TaskSet 3.0, whose tasks have all completed, from pool
21/05/20 09:54:26 INFO DAGScheduler: ResultStage 3 (sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30) finished in 0.058 s
21/05/20 09:54:26 INFO DAGScheduler: Job 1 is finished. Cancelling potential speculative or zombie tasks for this job
21/05/20 09:54:26 INFO TaskSchedulerImpl: Killing all running tasks in stage 3: Stage finished
21/05/20 09:54:26 INFO DAGScheduler: Job 1 finished: sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30, took 0.069078 s
21/05/20 09:54:26 INFO SparkContext: Starting job: runJob at PythonRDD.scala:154
21/05/20 09:54:26 INFO DAGScheduler: Registering RDD 9 (sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30) as input to shuffle 1
21/05/20 09:54:26 INFO DAGScheduler: Got job 2 (runJob at PythonRDD.scala:154) with 1 output partitions
21/05/20 09:54:26 INFO DAGScheduler: Final stage: ResultStage 6 (runJob at PythonRDD.scala:154)
21/05/20 09:54:26 INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage 5)
21/05/20 09:54:26 INFO DAGScheduler: Missing parents: List(ShuffleMapStage 5)
21/05/20 09:54:26 INFO DAGScheduler: Submitting ShuffleMapStage 5 (PairwiseRDD[9] at sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30), which has no missing parents
21/05/20 09:54:26 INFO MemoryStore: Block broadcast_4 stored as values in memory (estimated size 11.4 KiB, free 434.1 MiB)
21/05/20 09:54:26 INFO MemoryStore: Block broadcast_4_piece0 stored as bytes in memory (estimated size 6.8 KiB, free 434.1 MiB)
21/05/20 09:54:26 INFO BlockManagerInfo: Added broadcast_4_piece0 in memory on macbookpro-5566.lan:64240 (size: 6.8 KiB, free: 434.3 MiB)
21/05/20 09:54:26 INFO SparkContext: Created broadcast 4 from broadcast at DAGScheduler.scala:1223
21/05/20 09:54:26 INFO DAGScheduler: Submitting 2 missing tasks from ShuffleMapStage 5 (PairwiseRDD[9] at sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30) (first 15 tasks are for partitions Vector(0, 1))
21/05/20 09:54:26 INFO TaskSchedulerImpl: Adding task set 5.0 with 2 tasks
21/05/20 09:54:26 INFO TaskSetManager: Starting task 0.0 in stage 5.0 (TID 6, macbookpro-5566.lan, executor driver, partition 0, NODE_LOCAL, 7132 bytes)
21/05/20 09:54:26 INFO TaskSetManager: Starting task 1.0 in stage 5.0 (TID 7, macbookpro-5566.lan, executor driver, partition 1, NODE_LOCAL, 7132 bytes)
21/05/20 09:54:26 INFO Executor: Running task 0.0 in stage 5.0 (TID 6)
21/05/20 09:54:26 INFO Executor: Running task 1.0 in stage 5.0 (TID 7)
21/05/20 09:54:26 INFO ShuffleBlockFetcherIterator: Getting 2 (15.2 KiB) non-empty blocks including 2 (15.2 KiB) local and 0 (0.0 B) host-local and 0 (0.0 B) remote blocks
21/05/20 09:54:26 INFO ShuffleBlockFetcherIterator: Getting 2 (16.0 KiB) non-empty blocks including 2 (16.0 KiB) local and 0 (0.0 B) host-local and 0 (0.0 B) remote blocks
21/05/20 09:54:26 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 1 ms
21/05/20 09:54:26 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 1 ms
21/05/20 09:54:26 INFO PythonRunner: Times: total = 10, boot = -107, init = 111, finish = 6
21/05/20 09:54:26 INFO PythonRunner: Times: total = 10, boot = -106, init = 110, finish = 6
21/05/20 09:54:26 INFO Executor: Finished task 0.0 in stage 5.0 (TID 6). 1986 bytes result sent to driver
21/05/20 09:54:26 INFO Executor: Finished task 1.0 in stage 5.0 (TID 7). 1986 bytes result sent to driver
21/05/20 09:54:26 INFO TaskSetManager: Finished task 0.0 in stage 5.0 (TID 6) in 53 ms on macbookpro-5566.lan (executor driver) (1/2)
21/05/20 09:54:26 INFO TaskSetManager: Finished task 1.0 in stage 5.0 (TID 7) in 54 ms on macbookpro-5566.lan (executor driver) (2/2)
21/05/20 09:54:26 INFO TaskSchedulerImpl: Removed TaskSet 5.0, whose tasks have all completed, from pool
21/05/20 09:54:26 INFO DAGScheduler: ShuffleMapStage 5 (sortBy at /Users/sunyun/Downloads/RatedMovieSpark.py:30) finished in 0.069 s
21/05/20 09:54:26 INFO DAGScheduler: looking for newly runnable stages
21/05/20 09:54:26 INFO DAGScheduler: running: Set()
21/05/20 09:54:26 INFO DAGScheduler: waiting: Set(ResultStage 6)
21/05/20 09:54:26 INFO DAGScheduler: failed: Set()
21/05/20 09:54:26 INFO DAGScheduler: Submitting ResultStage 6 (PythonRDD[12] at RDD at PythonRDD.scala:53), which has no missing parents
21/05/20 09:54:26 INFO MemoryStore: Block broadcast_5 stored as values in memory (estimated size 8.4 KiB, free 434.1 MiB)
21/05/20 09:54:26 INFO MemoryStore: Block broadcast_5_piece0 stored as bytes in memory (estimated size 5.1 KiB, free 434.1 MiB)
21/05/20 09:54:26 INFO BlockManagerInfo: Added broadcast_5_piece0 in memory on macbookpro-5566.lan:64240 (size: 5.1 KiB, free: 434.3 MiB)
21/05/20 09:54:26 INFO SparkContext: Created broadcast 5 from broadcast at DAGScheduler.scala:1223
21/05/20 09:54:26 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 6 (PythonRDD[12] at RDD at PythonRDD.scala:53) (first 15 tasks are for partitions Vector(0))
21/05/20 09:54:26 INFO TaskSchedulerImpl: Adding task set 6.0 with 1 tasks
21/05/20 09:54:26 INFO TaskSetManager: Starting task 0.0 in stage 6.0 (TID 8, macbookpro-5566.lan, executor driver, partition 0, NODE_LOCAL, 7143 bytes)
21/05/20 09:54:26 INFO Executor: Running task 0.0 in stage 6.0 (TID 8)
21/05/20 09:54:26 INFO ShuffleBlockFetcherIterator: Getting 2 (10.9 KiB) non-empty blocks including 2 (10.9 KiB) local and 0 (0.0 B) host-local and 0 (0.0 B) remote blocks
21/05/20 09:54:26 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 1 ms
21/05/20 09:54:26 INFO PythonRunner: Times: total = 4, boot = -45, init = 48, finish = 1
21/05/20 09:54:26 INFO Executor: Finished task 0.0 in stage 6.0 (TID 8). 2026 bytes result sent to driver
21/05/20 09:54:26 INFO TaskSetManager: Finished task 0.0 in stage 6.0 (TID 8) in 22 ms on macbookpro-5566.lan (executor driver) (1/1)
21/05/20 09:54:26 INFO TaskSchedulerImpl: Removed TaskSet 6.0, whose tasks have all completed, from pool
21/05/20 09:54:26 INFO DAGScheduler: ResultStage 6 (runJob at PythonRDD.scala:154) finished in 0.038 s
21/05/20 09:54:26 INFO DAGScheduler: Job 2 is finished. Cancelling potential speculative or zombie tasks for this job
21/05/20 09:54:26 INFO TaskSchedulerImpl: Killing all running tasks in stage 6: Stage finished
21/05/20 09:54:26 INFO DAGScheduler: Job 2 finished: runJob at PythonRDD.scala:154, took 0.121859 s
Amityville: Dollhouse (1996) 1.0
Somebody to Love (1994) 1.0
Every Other Weekend (1990) 1.0
Homage (1995) 1.0
3 Ninjas: High Noon At Mega Mountain (1998) 1.0
Bird of Prey (1996) 1.0
Power 98 (1995) 1.0
Beyond Bedlam (1993) 1.0
Falling in Love Again (1980) 1.0
T-Men (1947) 1.0
21/05/20 09:54:26 INFO SparkContext: Invoking stop() from shutdown hook
21/05/20 09:54:26 INFO SparkUI: Stopped Spark web UI at http://macbookpro-5566.lan:4040
21/05/20 09:54:26 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
21/05/20 09:54:26 INFO MemoryStore: MemoryStore cleared
21/05/20 09:54:26 INFO BlockManager: BlockManager stopped
21/05/20 09:54:26 INFO BlockManagerMaster: BlockManagerMaster stopped
21/05/20 09:54:26 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!
21/05/20 09:54:26 INFO SparkContext: Successfully stopped SparkContext
21/05/20 09:54:26 INFO ShutdownHookManager: Shutdown hook called
21/05/20 09:54:26 INFO ShutdownHookManager: Deleting directory /private/var/folders/4k/dwx5pnzx3k596x3ct39tqskw0000gn/T/spark-5643016b-451c-4892-86f7-74cf9335c16b
21/05/20 09:54:26 INFO ShutdownHookManager: Deleting directory /private/var/folders/4k/dwx5pnzx3k596x3ct39tqskw0000gn/T/spark-0d672059-499e-4f43-a211-87c1f8fa1b31/pyspark-cf615851-efaf-4bca-8a08-81c7ba12108c
21/05/20 09:54:26 INFO ShutdownHookManager: Deleting directory /private/var/folders/4k/dwx5pnzx3k596x3ct39tqskw0000gn/T/spark-0d672059-499e-4f43-a211-87c1f8fa1b31
```

Relevant output (the ten movies with the lowest average rating):

```
Amityville: Dollhouse (1996) 1.0
Somebody to Love (1994) 1.0
Every Other Weekend (1990) 1.0
Homage (1995) 1.0
3 Ninjas: High Noon At Mega Mountain (1998) 1.0
Bird of Prey (1996) 1.0
Power 98 (1995) 1.0
Beyond Bedlam (1993) 1.0
Falling in Love Again (1980) 1.0
T-Men (1947) 1.0
```
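The aggregation in `RatedMovieSpark.py` (sum and count per movie with `reduceByKey`, average with `mapValues`, ascending `sortBy`, then `take(10)`) can be sanity-checked without a cluster. The sketch below mimics those steps on a plain Python list; it is a pure-Python emulation, not PySpark, and the sample ratings and the names `parse_input` and `worst_movies` are hypothetical. Note that all ten results above tie at 1.0: Python's `sorted` keeps tied items in input order, whereas Spark's distributed `sortBy` should not be relied on to order ties in any particular way.

```python
from collections import defaultdict

# Hypothetical u.data-style lines: user ID, movie ID, rating, timestamp (tab-separated)
SAMPLE_LINES = [
    "1\t10\t5\t0",
    "2\t10\t3\t0",
    "3\t20\t1\t0",
    "4\t30\t2\t0",
    "5\t30\t4\t0",
]


def parse_input(line):
    """Same parsing as parseInput: (movieID, (rating, 1.0))."""
    fields = line.split()
    return int(fields[1]), (float(fields[2]), 1.0)


def worst_movies(lines, n=10):
    """Emulate map -> reduceByKey -> mapValues -> sortBy -> take on a plain list."""
    totals = defaultdict(lambda: (0.0, 0.0))
    for movie, (rating, count) in map(parse_input, lines):
        total, seen = totals[movie]
        totals[movie] = (total + rating, seen + count)  # reduceByKey: sum ratings and counts
    averages = [(movie, total / count) for movie, (total, count) in totals.items()]  # mapValues
    return sorted(averages, key=lambda x: x[1])[:n]  # sortBy ascending, then take(n)


if __name__ == "__main__":
    print(worst_movies(SAMPLE_LINES))  # [(20, 1.0), (30, 3.0), (10, 4.0)]
```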